Goals

Build a robust application for Benvision that lets low-vision users experience their surroundings through a soundscape on an iPhone as they explore the world.

Opportunity to lead

I led XR design and development, working with three other team members from Benvision: Soobin Ha, Patrick Burton, and Lucas Thin.

Understanding the Challenge

For the visually impaired, navigating the world can be a challenge filled with uncertainty. Our goal was to provide a solution that not only aids in navigation but also enriches the perception of the world through sound.

Design Process

Identifying Needs

Through research and interaction with the visually impaired community, we identified the need for a more intuitive and enriching navigation aid.

Technological Integration

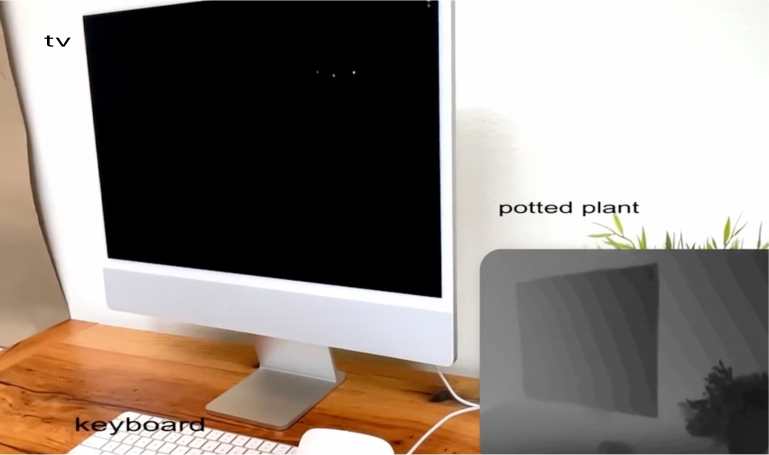

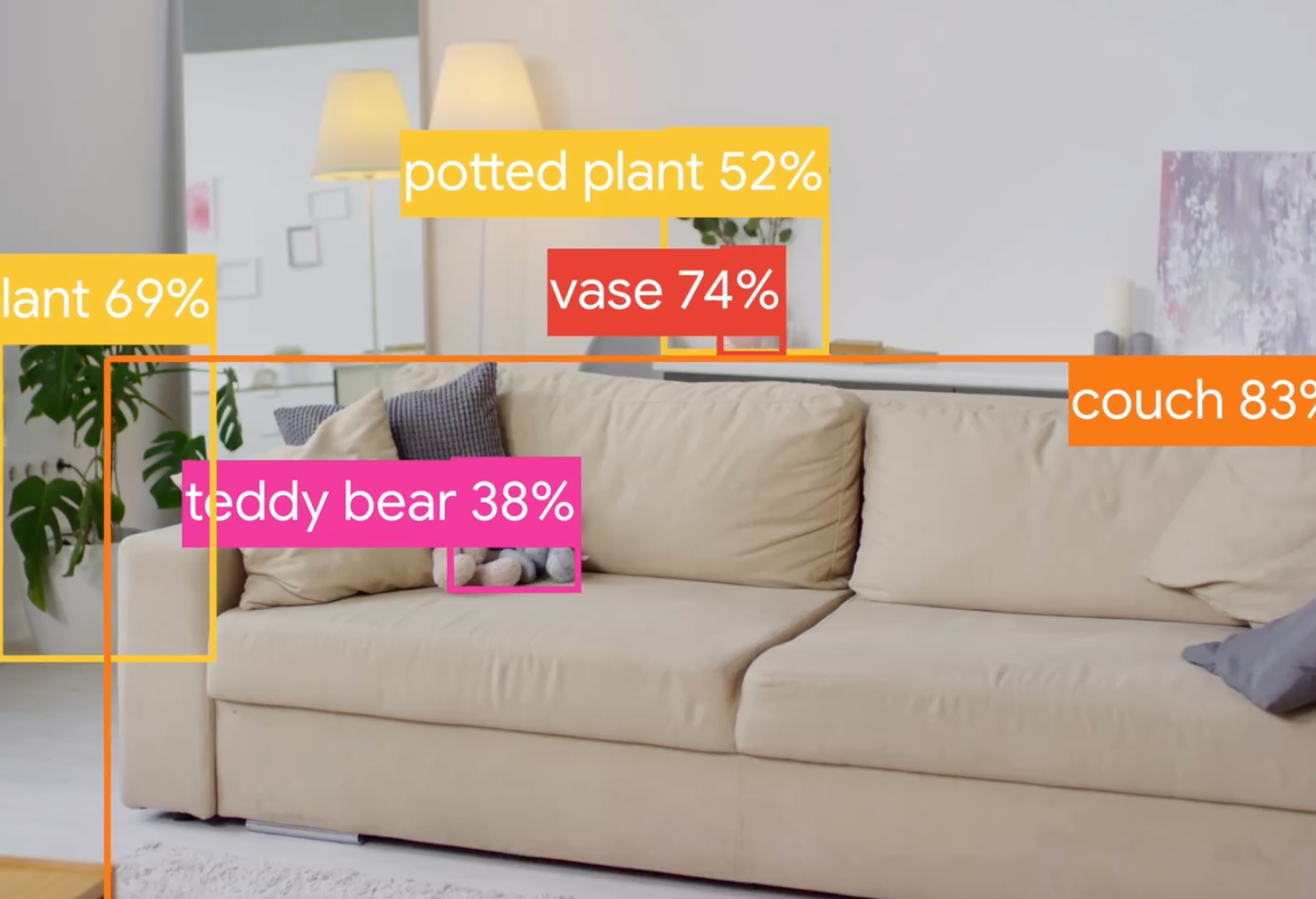

We built a unique computer vision algorithm capable of identifying and locating objects in the user's surroundings. By assigning musical cues to these objects and utilizing spatial audio, our prototype creates a rich auditory landscape.
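To make the idea concrete, here is a minimal Python sketch of how a detected object might be turned into a panned musical cue. It is an illustration under stated assumptions, not the algorithm used in the prototype: the `Detection` fields, the `CUES` table, the 60-degree field of view, the linear angle mapping, and the distance-based volume are all hypothetical, and plain stereo panning stands in for true spatial audio.

```python
import math
from dataclasses import dataclass

# Hypothetical label-to-cue table; the prototype's actual cue set is not described here.
CUES = {"door": "chime", "chair": "marimba", "person": "cello"}


@dataclass
class Detection:
    label: str         # object class reported by the vision model
    center_x: float    # bounding-box centre, 0.0 (left edge) to 1.0 (right edge)
    distance_m: float  # assumed distance estimate in metres


def azimuth_deg(center_x: float, fov_deg: float = 60.0) -> float:
    """Approximate the horizontal angle of the object (linear simplification)."""
    return (center_x - 0.5) * fov_deg


def stereo_gains(azimuth: float, fov_deg: float = 60.0) -> tuple[float, float]:
    """Constant-power pan: left^2 + right^2 == 1 for any angle in view."""
    pan = max(-1.0, min(1.0, azimuth / (fov_deg / 2)))  # -1 = far left, 1 = far right
    theta = (pan + 1.0) * math.pi / 4                   # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


def to_cue(det: Detection) -> dict:
    """Turn one detection into a sound name plus left/right channel levels."""
    az = azimuth_deg(det.center_x)
    left, right = stereo_gains(az)
    volume = 1.0 / max(det.distance_m, 1.0)  # closer objects are louder, capped at 1.0
    return {
        "sound": CUES.get(det.label, "click"),  # fall back to a generic cue
        "azimuth_deg": az,
        "left": left * volume,
        "right": right * volume,
    }
```

Calling `to_cue(Detection("door", 0.5, 2.0))` yields the `"chime"` cue with equal left and right levels, because the object sits in the centre of the frame. An object at the left edge of the frame would play only in the left channel.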

Experience Design

The design process focused on ensuring that the interface and experience were accessible, intuitive, and emotionally engaging. Feedback from early testers, like Chris McNally, played a crucial role in refining BEN's functionality and user interface.

Built a real-time navigation app

We built an iOS application that creates spatial soundscapes for the objects around a user.